TEDAI made its European debut in Vienna. Over three days, delegates were led through learning journeys and heard from industry heavyweights on topics such as regulation, deepfakes and brain-computer interfaces, as well as how Artificial Intelligence was used to win the Vesuvius Challenge: the seemingly impossible task of revealing text hidden in rolled-up scrolls that were carbonized in 79 AD.

The recordings will become available over the next few weeks, but in the meantime I would like to cover the sessions I found most interesting.

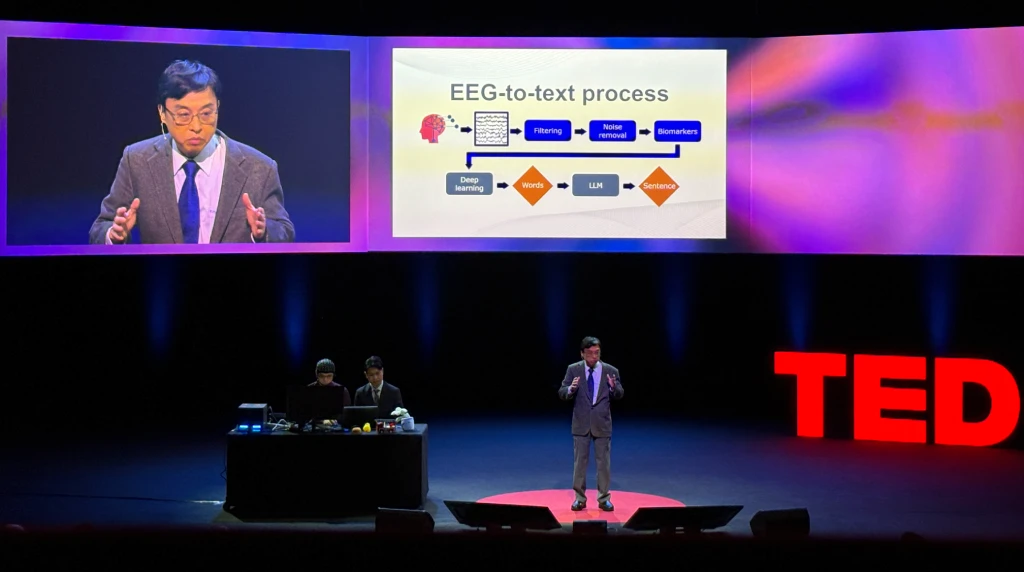

When I was reading about the planned presentations, I was immediately drawn to Chin-Teng Lin's session on brain-computer interfaces (BCI). The challenge is to read brain signals, recorded through electroencephalography (EEG), and translate them into text. This area has been gaining traction over the last few years. BCI devices are designed to provide a direct communication pathway between the brain and external devices, primarily computers or robotic limbs, and they have attracted attention because of their potential applications in medicine, rehabilitation and human augmentation. So I was very pleased to see his session premiere.

Chin-Teng Lin

Chin-Teng was very clear that their current device is around 50% accurate at detecting non-spoken words. We were then treated to a live demonstration of the device, worn by one of his students as they thought through several objects and sentences. Each of us has a unique neural signature, so the device may need to be fine-tuned to its user. In the demonstration, accuracy was only around 60%: at one point a hamburger was identified when the wearer had been shown a different object. Possibly the wearer was hungry.

These devices do pose a threat to privacy, although wearers could disable or remove them when they have no desire to share what they are really thinking. Even so, their potential to help people with locked-in syndrome and other disabilities is exciting.

Youssef Mohamed Nader and Julian Schilliger

The next session that interested me was from Youssef Mohamed Nader and Julian Schilliger, who discussed how they cracked the Vesuvius scrolls (also known as the Herculaneum papyri). The scrolls were carbonized when Mount Vesuvius erupted in 79 AD, engulfing the Villa of the Papyri at Herculaneum and preserving its library under a layer of volcanic material.

The Vesuvius Challenge offered prizes to researchers who could develop methods to read the scrolls. In 2024, a team of three researchers, including both speakers, won the Grand Prize for deciphering portions of a scroll using Artificial Intelligence. It took them nine months to achieve this feat. I have to admit that I would have lost interest after one month, so I admire their perseverance. As more of the scrolls are decoded this year, they continue to reveal new insights into the classical world.

There was, of course, an additional challenge: the scrolls could not be unrolled, as they would disintegrate. Instead, the team used Artificial Intelligence to analyse scans of the scrolls and distinguish ink from papyrus.

Cara Hunter

Cara Hunter, a Northern Irish politician, shared her personal story of being the victim of a deepfake video and how it affected her life. As she put it, "When AI erodes truth, it erodes trust." How would you explain to your father that what he has seen was generated by Artificial Intelligence? How do you reach elderly constituents who know little about the technology or the malicious actors who exploit it? It was a raw and engaging story, which Cara told with great courage.

Shaolei Ren

Shaolei Ren enlightened us on the water consumption of Artificial Intelligence: currently, 500 ml of water is consumed for every 10–15 AI queries. Water is needed to cool data centres and power plants, as well as to manufacture computer chips. Larger models have a correspondingly larger environmental impact.
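To put that figure in per-query terms, the short sketch below simply divides 500 ml by each end of the 10 to 15 query range. It assumes the water is spread evenly across queries, which is a simplification for illustration only; the function name `per_query_ml` is hypothetical.

```python
# Figure quoted in the talk: 500 ml of water per 10-15 AI queries.
# Assumption (for illustration only): consumption is spread evenly across queries.
WATER_ML = 500
QUERY_RANGE = (10, 15)


def per_query_ml(total_ml: float, queries: int) -> float:
    """Hypothetical helper: average water use per query, in millilitres."""
    return total_ml / queries


high = per_query_ml(WATER_ML, QUERY_RANGE[0])  # fewer queries -> more water each
low = per_query_ml(WATER_ML, QUERY_RANGE[1])   # more queries -> less water each
print(f"About {low:.0f}-{high:.0f} ml of water per query")
```

Under that assumption, each query accounts for roughly 33 to 50 ml of water.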

The water consumption of Artificial Intelligence is still not fully understood. Some companies are turning to nuclear power for energy, but nuclear power is itself very water-intensive. Shaolei researches sustainable computing, with a particular focus on reducing water consumption in data centres, and he has been instrumental in industry advances such as real-time water footprint reporting tools.

Read about more speakers and their sessions in the next article.

Speakers Included Here

Chin-Teng Lin, Professor & Co-Director – Australian AI Institute

Youssef Mohamed Nader, Machine Learning Researcher – Free University Berlin

Julian Schilliger, Digital Archaeologist – Scroll Prize

Selena Deckelmann, Chief Product & Technology Officer – Wikimedia Foundation

Cara Hunter, Politician – Member of the Northern Ireland Assembly

Shaolei Ren, Computer Scientist – UC Riverside